On Wednesday, Stability AI released a new family of free, open-source AI language models called StableLM. The company hopes to repeat the catalytic effect of its Stable Diffusion open-source image synthesis model, introduced in 2022. With further refinement, StableLM could serve as the basis for an open-source alternative to ChatGPT.

According to Stability, alpha versions of StableLM with 3 billion and 7 billion parameters are now available on GitHub, with 15-billion- and 65-billion-parameter models to follow. The company is releasing the models under the Creative Commons BY-SA-4.0 license, which requires that derivative works credit the original author and be distributed under the same license.

London-based Stability AI Ltd. has positioned itself as an open-source rival to OpenAI, which, despite its “open” name, rarely releases open-source models and keeps its neural network weights (the mass of numerical values that define the core functionality of an AI model) proprietary.

In an introductory blog post, Stability writes, “Language models will form the backbone of our digital economy, and we want everyone to have a voice in their design.” The authors add, “Models like StableLM show our dedication to AI that is open, approachable, and helpful.”

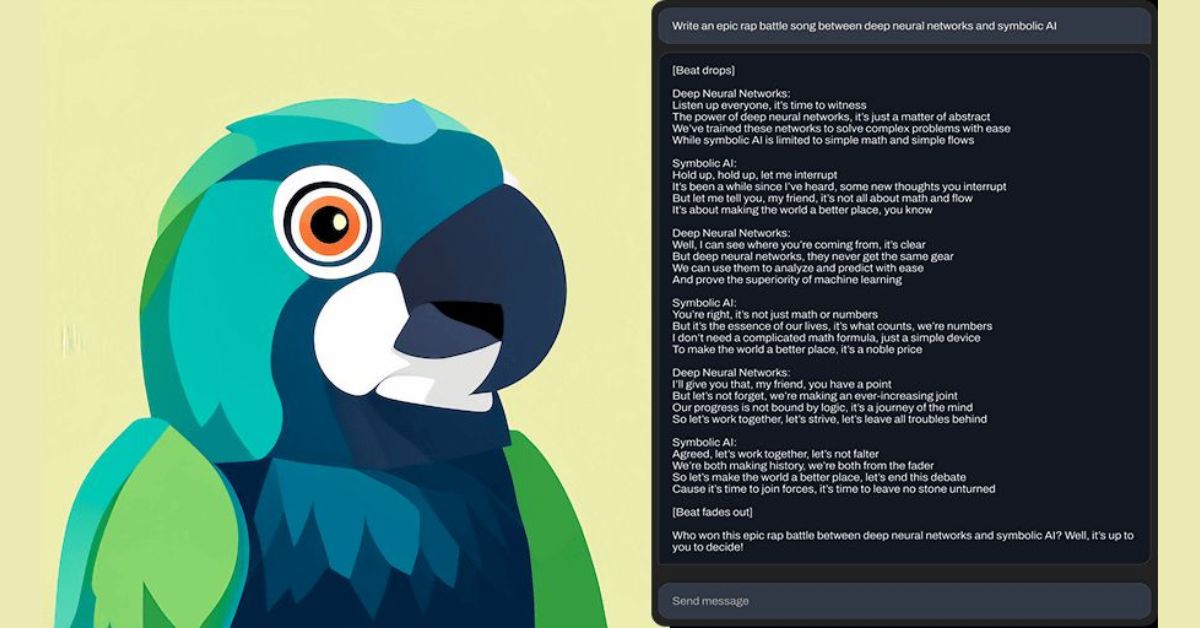

Like GPT-4, the large language model (LLM) that powers the most capable version of ChatGPT, StableLM generates text by predicting the next token (word fragment) in a sequence. That sequence starts with input provided by a human in the form of a “prompt.” As a result, StableLM can compose human-sounding text and write code.
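To make next-token prediction concrete, here is a deliberately tiny sketch. It is not how StableLM or GPT-4 work internally (those use large neural networks over sub-word tokens); it is a toy bigram counter over whole words, and the function names and sample corpus are made up for illustration. The loop is the part that carries over: start from a prompt, pick a likely next token, append it, and repeat.

```python
from collections import Counter, defaultdict


def train_bigram(tokens):
    """Count, for every token, which tokens follow it in the training text."""
    following = defaultdict(Counter)
    for current, nxt in zip(tokens, tokens[1:]):
        following[current][nxt] += 1
    return following


def generate(following, prompt, max_new_tokens=5):
    """Greedy decoding: repeatedly append the most frequent next token."""
    output = list(prompt)
    for _ in range(max_new_tokens):
        candidates = following.get(output[-1])
        if not candidates:
            break  # the last token was never followed by anything in training
        next_token, _count = candidates.most_common(1)[0]
        output.append(next_token)
    return output


# Hypothetical toy corpus, split on whitespace instead of real sub-word tokens.
corpus = "the model reads the prompt then the model reads more text".split()
model = train_bigram(corpus)

# The "prompt" is the single token "the"; the model continues it.
print(" ".join(generate(model, ["the"], max_new_tokens=2)))
```

A real LLM replaces the frequency table with a neural network that scores every token in its vocabulary, and it usually samples from those scores rather than always taking the top one.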

StableLM, like other recent “small” LLMs such as Meta’s LLaMA, Stanford Alpaca, Cerebras-GPT, and Dolly 2.0, claims to achieve comparable performance to OpenAI’s benchmark GPT-3 model while employing far fewer parameters (7 billion against 175 billion).

Parameters are the variables a language model adjusts as it learns from its training data. A language model with fewer parameters is smaller and more efficient, making it easier to run on local devices such as phones and laptops. Achieving good performance with fewer parameters, however, is a significant engineering challenge in the field of artificial intelligence.
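A rough back-of-the-envelope calculation shows why parameter count matters for where a model can run. The sketch below assumes each parameter is stored as a 16-bit number (2 bytes), which is a common choice but an assumption here, not a published detail of StableLM or GPT-3. It counts only the stored weights and ignores the extra memory needed while the model is running.

```python
def weight_memory_gb(num_parameters, bytes_per_parameter=2):
    """Approximate size of the stored weights in gigabytes (1 GB = 1e9 bytes).

    bytes_per_parameter=2 assumes 16-bit weights; this is an illustrative
    assumption, not a figure from Stability AI or OpenAI.
    """
    return num_parameters * bytes_per_parameter / 1e9


# Parameter counts taken from the comparison in the article.
for name, count in [("7B-parameter model", 7_000_000_000),
                    ("175B-parameter model", 175_000_000_000)]:
    print(f"{name}: about {weight_memory_gb(count):.0f} GB of weights")
```

Under these assumptions the smaller model's weights come to about 14 GB and the larger model's to about 350 GB, a 25-fold difference.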

“Our StableLM models can generate text and code and will power a range of downstream applications,” says Stability. According to the company, the models show that, with appropriate training, even small and efficient models can deliver high performance.

1. Stability AI launches StableLM

The creators of Stable Diffusion, Stability AI, just released a suite of open-sourced large language models (LLMs) called StableLM.

This comes just 5 days after the public release of their text-to-image generative AI model, SDXL. pic.twitter.com/uhzXNzv7w1

— Rowan Cheung (@rowancheung) April 20, 2023

StableLM was reportedly trained on “a new experimental data set” that builds on The Pile, an open-source data set, but is three times as large. Stability attributes the model’s “surprisingly high performance” at smaller parameter sizes in conversational and coding tasks to the “richness” of this data set, details of which it promises to release later.

In our preliminary testing, a version of StableLM’s 7B model fine-tuned for dialog using the Alpaca method appeared to perform better (in terms of producing the outputs you would expect given the prompt) than Meta’s raw 7B-parameter LLaMA model, though it still fell short of GPT-3. Versions of StableLM with more parameters may prove more flexible and capable.

Stable Diffusion was developed by the CompVis group at the Ludwig Maximilian University of Munich and released publicly as open-source software in August 2022, with support and promotion from Stability.

As an early open-source latent diffusion model that could generate images from prompts, Stable Diffusion kicked off a period of rapid advancement in image-synthesis technology. It also provoked a strong backlash from artists and businesses, some of which have sued Stability AI. Stability’s move into language modeling could have similar effects.

The 7-billion-parameter StableLM base model can be tested on Hugging Face, and its fine-tuned version on Replicate. In addition, a StableLM implementation fine-tuned for dialog is available on Hugging Face.

Stability says it will release a full technical report on StableLM “in the near future.”